IT Tips & Insights: A Softensity software engineer walks you through the features and capabilities of Azure Data Factory.

By Rabby Hasan, Software Engineer

What is Azure Data Factory?

Azure Data Factory (ADF) is Azure's cloud-based data integration service. It supports ETL (extract, transform, load) and ELT (extract, load, transform) workflows, and it can also run SQL Server Integration Services (SSIS) packages. It is a fully managed service billed on a pay-as-you-go basis, so you pay only for what you run. With ADF you can move data from many kinds of sources into many kinds of destinations and transform it along the way. In short, ADF is a data processing factory that brings together data from different sources.

When should you use Azure Data Factory?

- Copying data from one data store to another

- Transforming data from different sources

- Migrating data with code-free pipelines

- Building pipelines from templates

- Running SSIS packages in Azure (with the SSIS catalog in Azure SQL)

- Scheduling SSIS packages in Azure

- Building and orchestrating pipelines

- And more …

Getting Started

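Before you start working with ADF, you need to create a data factory in Azure. To do so:

- Search for Data factories in the Azure portal (portal.azure.com)

- Create a data factory, choosing a subscription, resource group, region, and a globally unique name

- Open Azure Data Factory Studio from the new data factory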
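Instead of clicking through the portal, you can also create the factory from an ARM template. The Python sketch below builds that template resource as a plain dictionary. The helper names, the `contoso-adf-dev` name, and the `eastus` region are hypothetical. The name check encodes Microsoft's data factory naming rules as documented at the time of writing (3 to 63 characters; letters, digits, and dashes; starting with a letter or digit; every dash followed by a letter or digit). It cannot check global uniqueness, which only Azure can verify.

```python
import json
import re

# Data factory naming rule (as documented at the time of writing): letters,
# digits and dashes; must start with a letter or digit; every dash must be
# followed by a letter or digit (so no "--" and no trailing dash).
_NAME_PATTERN = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9]|-(?=[A-Za-z0-9]))*")


def is_valid_factory_name(name: str) -> bool:
    """Return True if `name` is 3-63 characters and matches the pattern above."""
    return 3 <= len(name) <= 63 and _NAME_PATTERN.fullmatch(name) is not None


def factory_arm_resource(name: str, location: str) -> dict:
    """Build an ARM template resource for a data factory (hypothetical helper)."""
    if not is_valid_factory_name(name):
        raise ValueError(f"invalid data factory name: {name!r}")
    return {
        "type": "Microsoft.DataFactory/factories",
        "apiVersion": "2018-06-01",
        "name": name,
        "location": location,
        # A system-assigned managed identity lets the factory authenticate
        # to other Azure resources without stored secrets.
        "identity": {"type": "SystemAssigned"},
        "properties": {},
    }


if __name__ == "__main__":
    print(json.dumps(factory_arm_resource("contoso-adf-dev", "eastus"), indent=2))
```

The printed resource would go into the `resources` array of an ARM template, which you can then deploy with your usual tooling.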

Main Features

- Ingest

- Orchestrate

- Transform Data

- SSIS

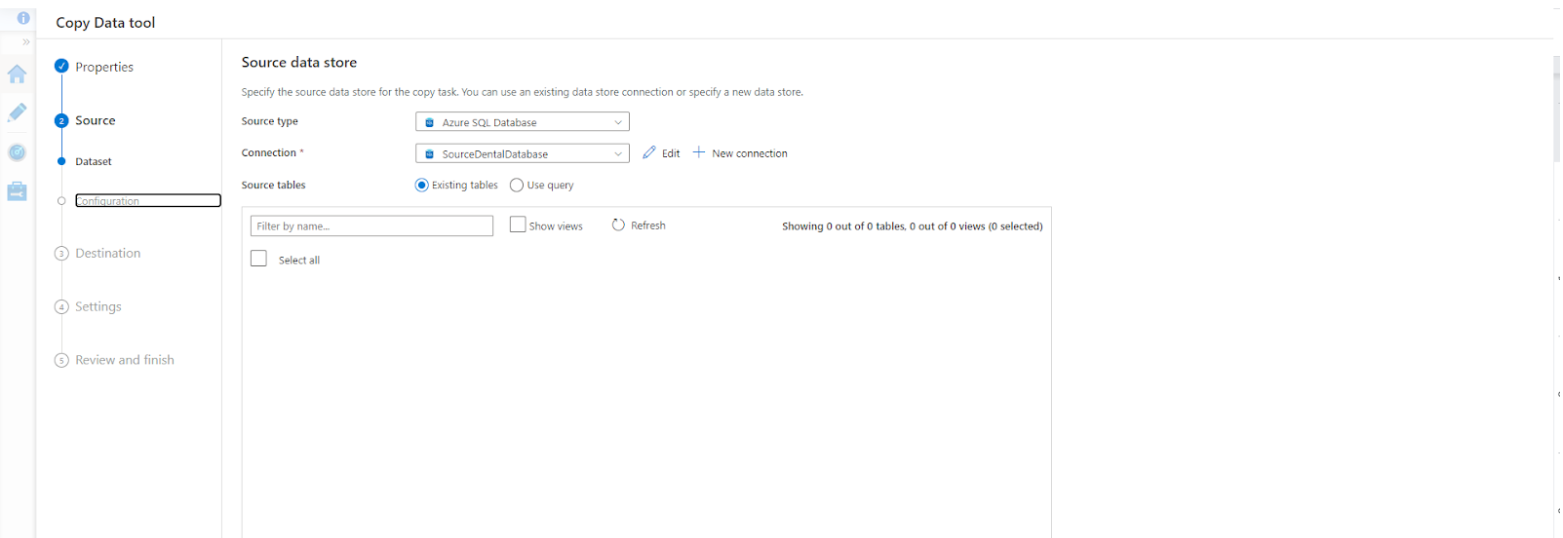

Ingest

Ingestion is the process of copying data from one or more sources into a destination. In ADF, the Copy Data tool reads tables from the source and copies their data to the destination. The typical steps are:

- Create a source connection

- Preview the datasets loaded from the source

- Create a destination connection

- Map source tables and columns to the destination

- Run the copy and view its progress
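Behind the scenes, the Copy Data tool produces a pipeline containing a Copy activity. As a rough sketch, assuming two datasets named `SourceCustomers` and `DestCustomers` (both hypothetical) that point at Azure SQL Database, the JSON for such a pipeline, including an explicit column mapping, could be generated like this:

```python
import json


def dataset_ref(name: str) -> dict:
    """Reference a dataset that is defined separately in the factory."""
    return {"referenceName": name, "type": "DatasetReference"}


def copy_pipeline(name: str, source_ds: str, sink_ds: str, column_map: dict) -> dict:
    """Build pipeline JSON containing a single Copy activity.

    column_map maps source column names to destination column names and is
    written as an explicit TabularTranslator mapping.
    """
    mappings = [
        {"source": {"name": src}, "sink": {"name": dst}}
        for src, dst in column_map.items()
    ]
    copy_activity = {
        "name": f"Copy_{source_ds}_to_{sink_ds}",
        "type": "Copy",
        "inputs": [dataset_ref(source_ds)],
        "outputs": [dataset_ref(sink_ds)],
        "typeProperties": {
            # Assumption: Azure SQL Database on both ends. Other connectors
            # use other source/sink types (for example DelimitedTextSource).
            "source": {"type": "AzureSqlSource"},
            "sink": {"type": "AzureSqlSink"},
            "translator": {"type": "TabularTranslator", "mappings": mappings},
        },
    }
    return {"name": name, "properties": {"activities": [copy_activity]}}


if __name__ == "__main__":
    pipeline = copy_pipeline(
        "CopyCustomers",       # hypothetical pipeline name
        "SourceCustomers",     # hypothetical source dataset
        "DestCustomers",       # hypothetical destination dataset
        {"Id": "CustomerId", "Name": "FullName"},
    )
    print(json.dumps(pipeline, indent=2))
```

If you leave out the `translator`, the Copy activity falls back to mapping columns by name, so an explicit mapping is mainly useful when source and destination column names differ.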

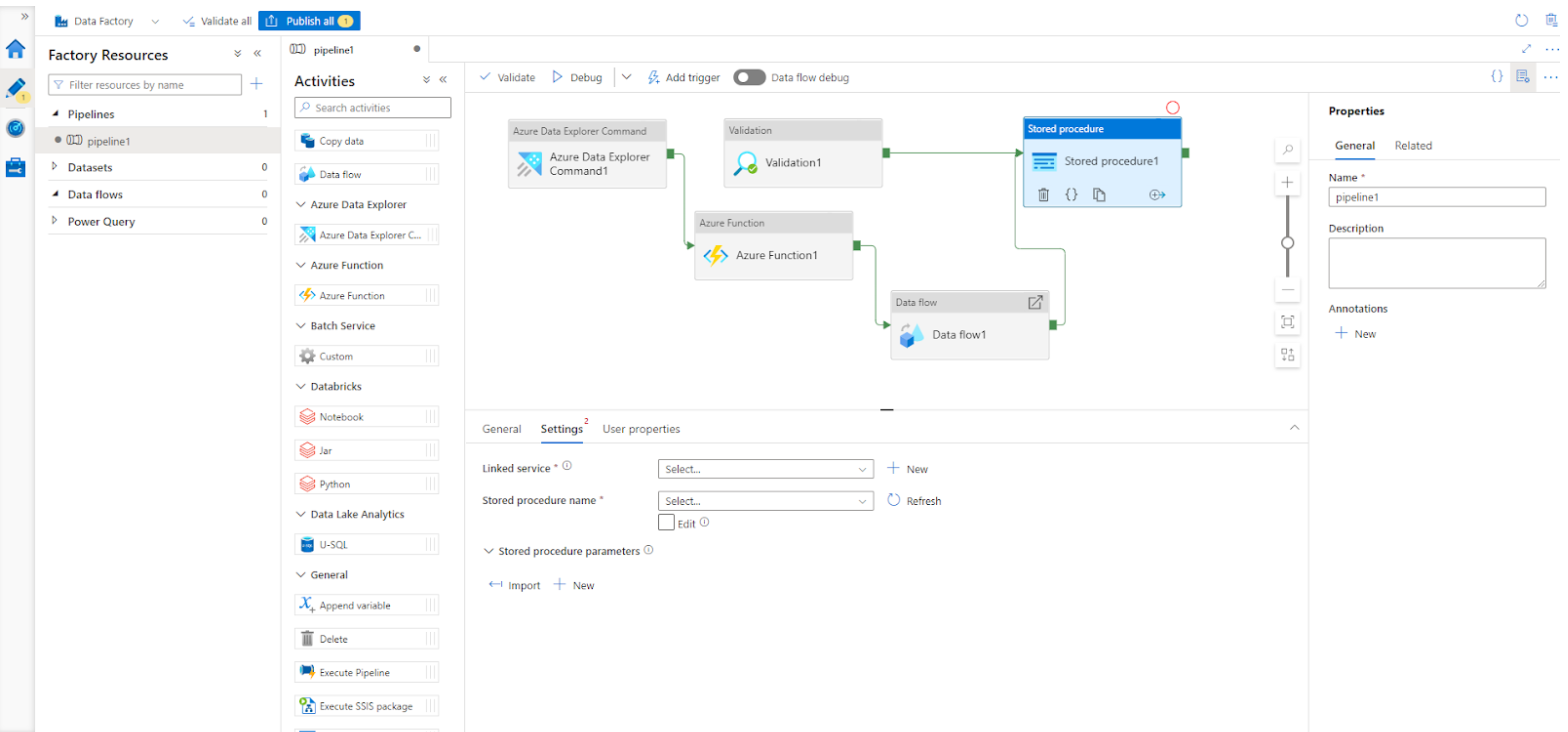

Orchestrate

Orchestration is another powerful feature of ADF. With orchestration, you build a pipeline out of activities of various types, and you can also design visual data flows. Activities are grouped into the following categories, and each activity has its own configuration settings:

- Move & Transform

- Azure Data Explorer

- Azure Function

- Custom Service

- Databricks & Data Lake

- HDInsight for big data

- Iteration & conditionals

- Machine Learning

- Power Query
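To make these categories more concrete, here is a hedged sketch of one activity from the iteration and conditional category: a ForEach that runs its inner activities once per item of an array parameter. The pipeline name, the `tableNames` parameter, and the placeholder Wait activity are hypothetical; the 1 to 50 bound on `batchCount` reflects ADF's documented limit on parallel iterations.

```python
import json


def foreach_pipeline(name: str, inner_activities: list, batch_count: int = 4) -> dict:
    """Wrap inner activities in a ForEach that runs once per table name.

    The tables arrive as an Array pipeline parameter; inside the loop, the
    expression @item() refers to the current element.
    """
    if not 1 <= batch_count <= 50:
        raise ValueError("batchCount must be between 1 and 50")
    return {
        "name": name,
        "properties": {
            "parameters": {"tableNames": {"type": "Array"}},
            "activities": [
                {
                    "name": "ForEachTable",
                    "type": "ForEach",
                    "typeProperties": {
                        "items": {
                            "value": "@pipeline().parameters.tableNames",
                            "type": "Expression",
                        },
                        # Run iterations in parallel, at most batch_count at a time.
                        "isSequential": False,
                        "batchCount": batch_count,
                        "activities": inner_activities,
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder inner activity: a Wait stands in for real per-table work,
    # such as a parameterized Copy activity.
    wait = {"name": "WaitPerTable", "type": "Wait", "typeProperties": {"waitTimeInSeconds": 5}}
    print(json.dumps(foreach_pipeline("LoopOverTables", [wait]), indent=2))
```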

Orchestrating data usually follows these steps:

- Create and validate the pipeline or data flow

- Configure datasets

- Run & view progress
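Pipelines express orchestration through each activity's `dependsOn` list. The sketch below (Python 3.9+ for `graphlib`) checks those dependencies locally and returns one valid run order. The activity names are hypothetical, the stubs omit `typeProperties`, and this is only a local pre-check, not ADF's own validation.

```python
from graphlib import TopologicalSorter


def activity(name: str, activity_type: str, depends_on=()) -> dict:
    """Activity stub with ADF-style dependsOn entries (typeProperties omitted)."""
    return {
        "name": name,
        "type": activity_type,
        "dependsOn": [
            {"activity": dep, "dependencyConditions": ["Succeeded"]}
            for dep in depends_on
        ],
    }


def validate_and_order(activities: list) -> list:
    """Check dependencies locally and return one valid execution order.

    Raises ValueError for references to unknown activities, and
    graphlib.CycleError (a ValueError subclass) for circular dependencies.
    """
    names = {a["name"] for a in activities}
    graph = {}
    for a in activities:
        deps = [d["activity"] for d in a.get("dependsOn", [])]
        unknown = [d for d in deps if d not in names]
        if unknown:
            raise ValueError(f"{a['name']} depends on unknown activities: {unknown}")
        # TopologicalSorter expects a mapping of node -> predecessors.
        graph[a["name"]] = deps
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    pipeline_activities = [
        activity("CopyRawData", "Copy"),
        activity("TransformData", "ExecuteDataFlow", depends_on=["CopyRawData"]),
        activity("NotifyTeam", "WebActivity", depends_on=["TransformData"]),
    ]
    print(validate_and_order(pipeline_activities))
    # ['CopyRawData', 'TransformData', 'NotifyTeam']
```

Activities with no dependency between them may run in parallel in ADF, so the returned list is just one order that respects the dependencies.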

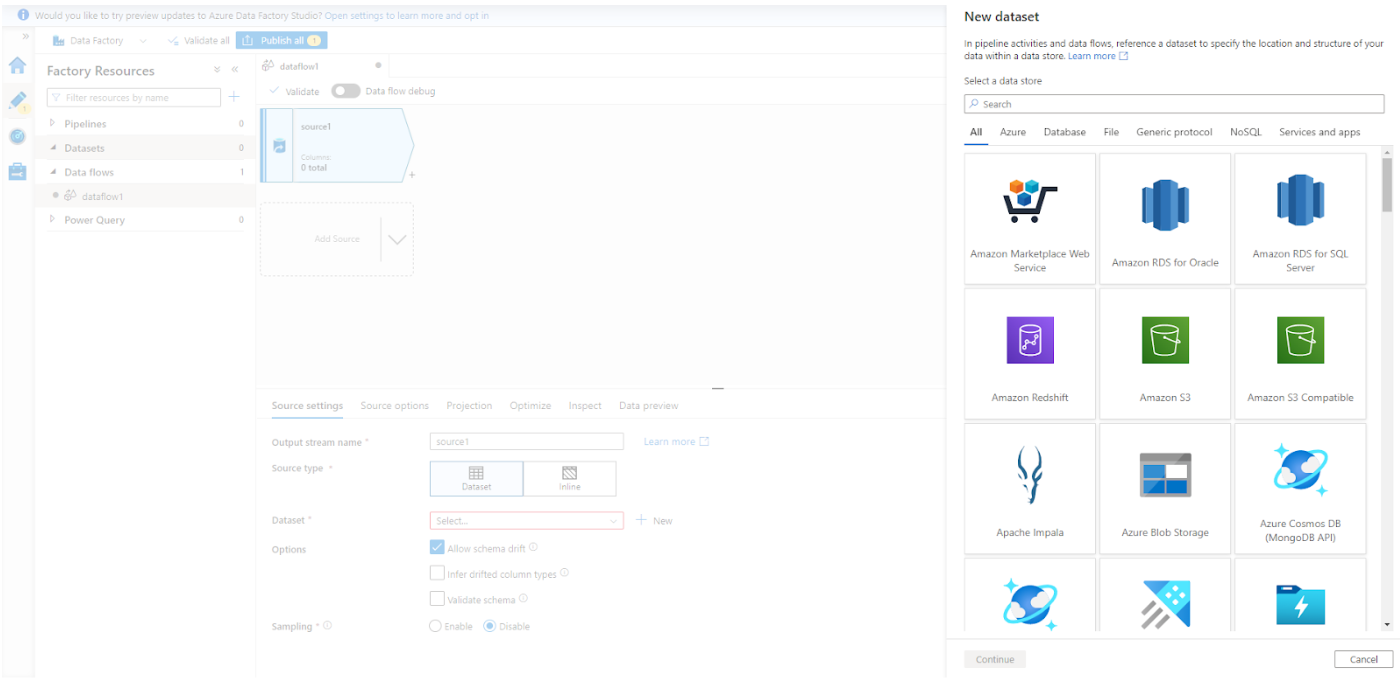

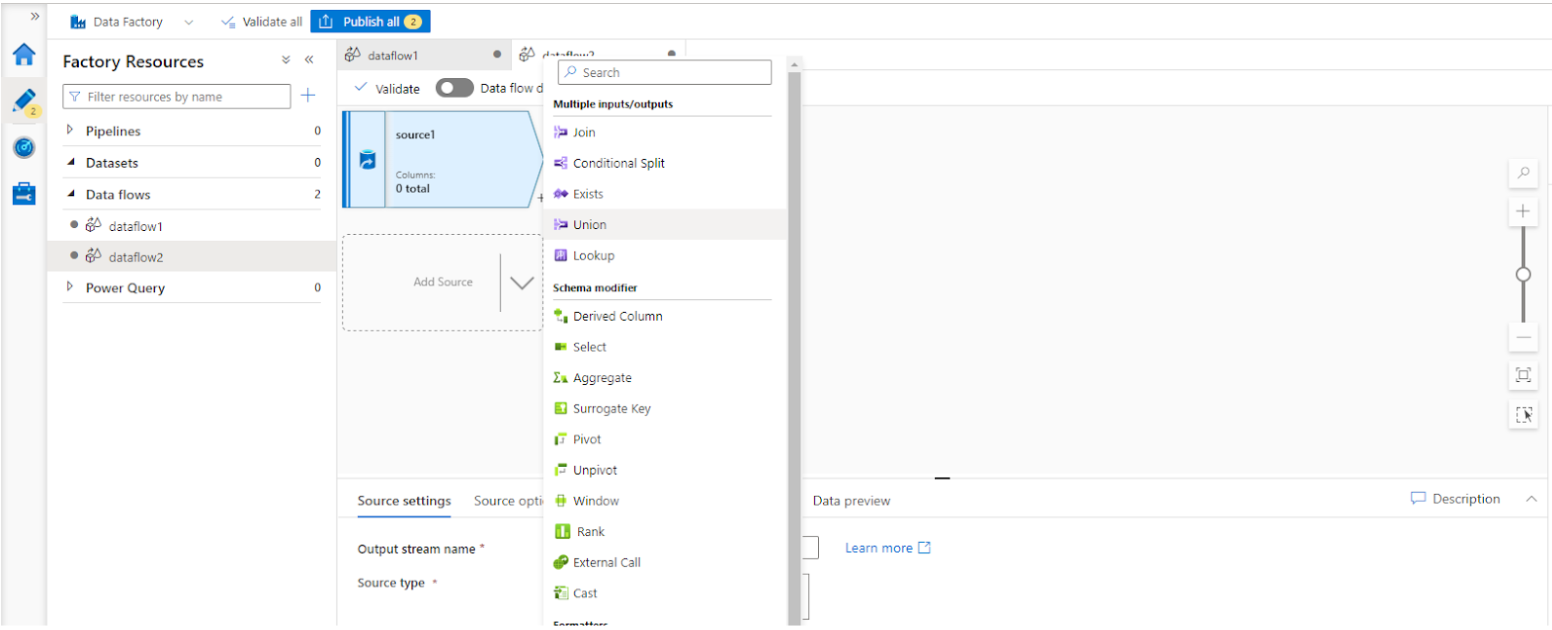

Transform

Data transformation is another key feature of ADF. Data flows offer a wide range of transformations: you can join, union, aggregate, and pivot data, among many other operations.
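To illustrate what these transformations do to rows of data, the sketch below reproduces union, join, aggregate, and pivot in plain Python over a few hypothetical order records. It only shows the semantics: ADF mapping data flows are configured visually and executed on Spark clusters that ADF manages, not with code like this.

```python
from collections import defaultdict


def union(*streams):
    """Union: append the rows of several streams with the same shape."""
    return [row for stream in streams for row in stream]


def inner_join(left, right, key):
    """Inner join on `key`; assumes `key` is unique on the right side."""
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]


def aggregate_sum(rows, group_by, value):
    """Aggregate: sum `value` for each distinct `group_by` value."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[group_by]] += row[value]
    return dict(totals)


def pivot_sum(rows, row_key, column_key, value):
    """Pivot: turn distinct `column_key` values into columns, summing `value`."""
    table = defaultdict(lambda: defaultdict(int))
    for row in rows:
        table[row[row_key]][row[column_key]] += row[value]
    return {k: dict(v) for k, v in table.items()}


# Hypothetical sample data.
orders = [
    {"order_id": 1, "customer_id": "C1", "region": "EU", "quarter": "Q1", "amount": 100},
    {"order_id": 2, "customer_id": "C2", "region": "US", "quarter": "Q1", "amount": 250},
    {"order_id": 3, "customer_id": "C1", "region": "EU", "quarter": "Q2", "amount": 50},
]
late_orders = [
    {"order_id": 4, "customer_id": "C2", "region": "US", "quarter": "Q2", "amount": 75},
]
customers = [
    {"customer_id": "C1", "name": "Contoso"},
    {"customer_id": "C2", "name": "Fabrikam"},
]

if __name__ == "__main__":
    all_orders = union(orders, late_orders)
    joined = inner_join(all_orders, customers, "customer_id")
    print(aggregate_sum(joined, "name", "amount"))  # {'Contoso': 150, 'Fabrikam': 325}
    print(pivot_sum(all_orders, "region", "quarter", "amount"))
    # {'EU': {'Q1': 100, 'Q2': 50}, 'US': {'Q1': 250, 'Q2': 75}}
```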

To transform data:

1. Choose a dataset and create a connection (linked service) to its data store

2. Create a data flow and configure its sources from the datasets

3. Add transformations to the data flow

4. Optionally, use Power Query to prepare the data

5. Publish, then run the transformation and view its progress
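Once a data flow is published, a pipeline runs it with an Execute Data Flow activity. A minimal sketch of that pipeline JSON follows. The pipeline and data flow names are hypothetical, and the default of 8 cores with the `General` compute type is an assumption that you would tune to your data volume.

```python
import json


def run_data_flow_pipeline(pipeline_name: str, data_flow_name: str,
                           core_count: int = 8, compute_type: str = "General") -> dict:
    """Pipeline JSON with a single Execute Data Flow activity."""
    return {
        "name": pipeline_name,
        "properties": {
            "activities": [
                {
                    "name": f"Run_{data_flow_name}",
                    "type": "ExecuteDataFlow",
                    "typeProperties": {
                        # Points at a data flow already authored in the factory.
                        "dataFlow": {"referenceName": data_flow_name, "type": "DataFlowReference"},
                        # Size of the Spark cluster ADF provisions for the run.
                        "compute": {"coreCount": core_count, "computeType": compute_type},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical names for the pipeline and the data flow it runs.
    print(json.dumps(run_data_flow_pipeline("TransformOrders", "JoinAndAggregateOrders"), indent=2))
```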

SSIS

Azure SQL Database does not run SSIS packages natively. ADF fills this gap with the Azure-SSIS integration runtime, which runs SSIS packages in Azure and hosts the SSIS catalog (SSISDB) in Azure SQL Database or Azure SQL Managed Instance. Integration Services can extract and transform data from a wide variety of sources, such as XML data files, flat files, and relational data sources, and then load the data into one or more destinations.

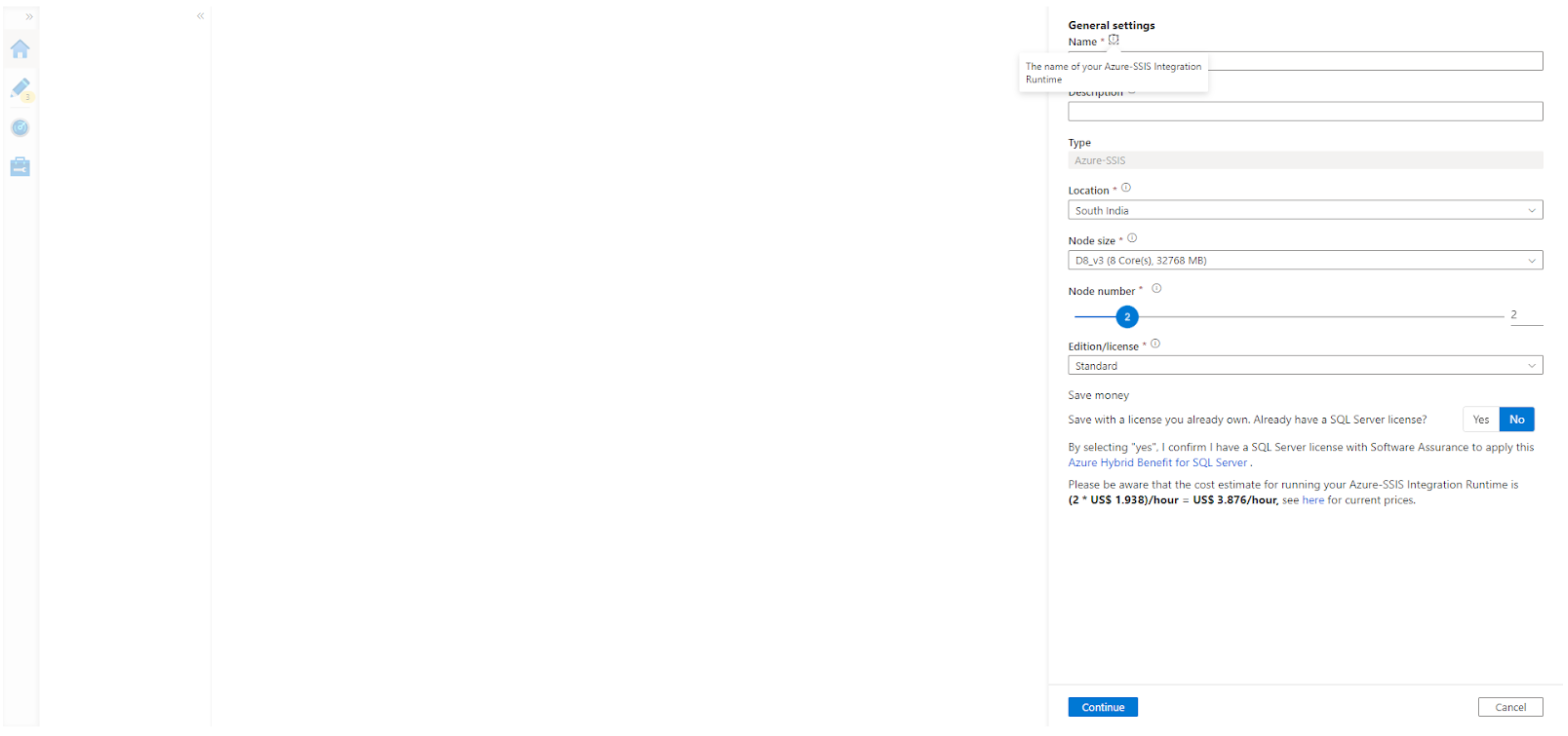

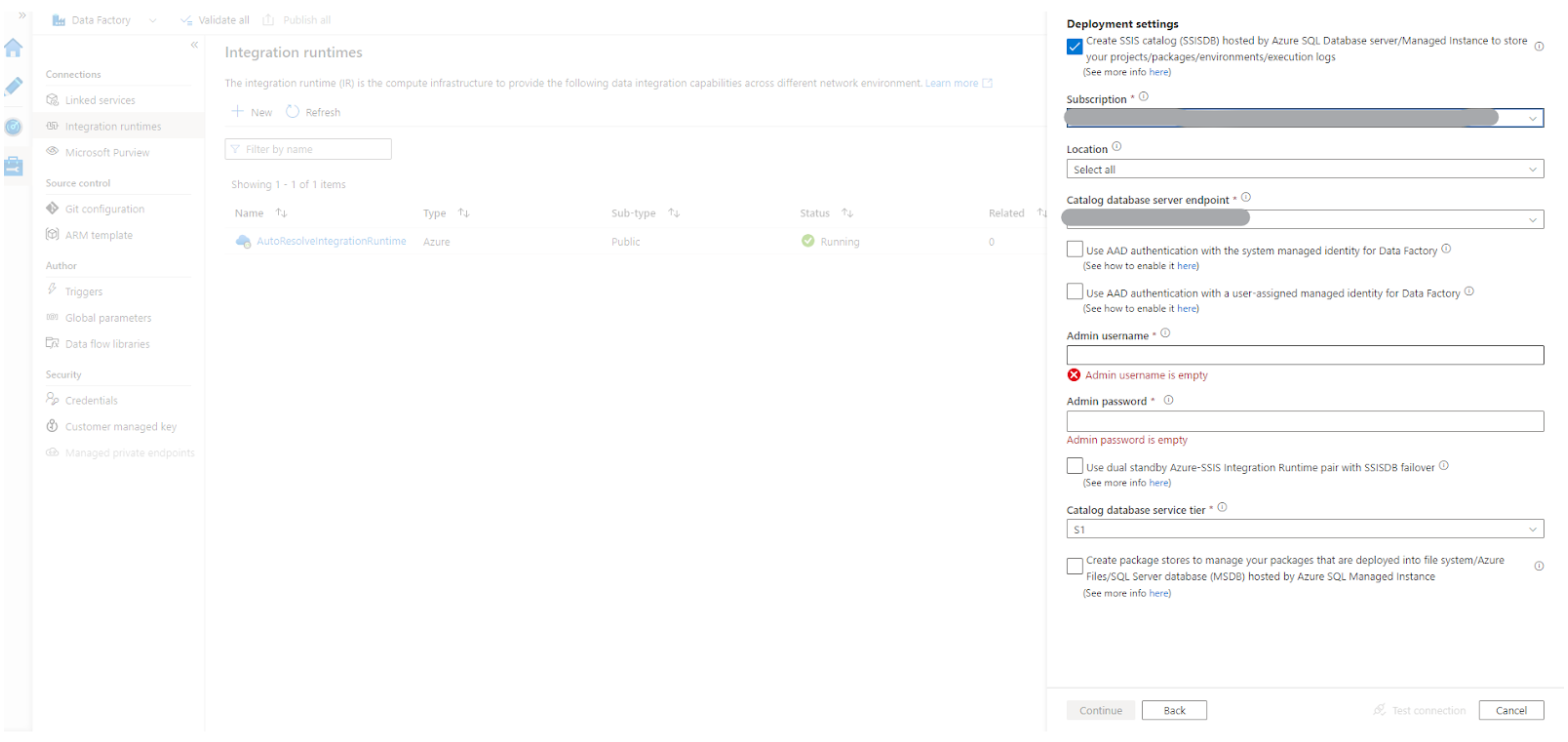

1. Configure SSIS in ADF

2. Set up the Azure-SSIS integration runtime (this creates the SSISDB catalog)

3. Deploy, run, and monitor packages

4. Schedule SSIS package runs
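Running and scheduling a package ends up as two pieces of JSON: a pipeline with an Execute SSIS Package activity that runs on the Azure-SSIS integration runtime, and a schedule trigger that starts that pipeline. The sketch below generates both. The integration runtime name `MyAzureSsisIR`, the package path, and the nightly schedule are hypothetical examples.

```python
import json
from datetime import datetime, timezone

# Recurrence frequencies accepted by ADF schedule triggers.
FREQUENCIES = {"Minute", "Hour", "Day", "Week", "Month"}


def ssis_pipeline(name: str, package_path: str, ir_name: str) -> dict:
    """Pipeline with one Execute SSIS Package activity.

    package_path follows the SSISDB layout Folder/Project/Package.dtsx.
    """
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "RunSsisPackage",
                    "type": "ExecuteSSISPackage",
                    "typeProperties": {
                        # The Azure-SSIS integration runtime that executes the package.
                        "connectVia": {"referenceName": ir_name, "type": "IntegrationRuntimeReference"},
                        "packageLocation": {"type": "SSISDB", "packagePath": package_path},
                        "runtime": "x64",
                        "loggingLevel": "Basic",
                    },
                }
            ]
        },
    }


def schedule_trigger(name: str, pipeline_name: str, frequency: str,
                     interval: int, start: datetime) -> dict:
    """Schedule trigger that starts `pipeline_name` every `interval` units of `frequency`."""
    if frequency not in FREQUENCIES:
        raise ValueError(f"unsupported frequency: {frequency}")
    if interval < 1:
        raise ValueError("interval must be at least 1")
    if start.tzinfo is None:
        raise ValueError("start must be timezone-aware")
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,
                    "interval": interval,
                    # Normalize to UTC so the timestamp and timeZone agree.
                    "startTime": start.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
                    "timeZone": "UTC",
                }
            },
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name, "type": "PipelineReference"}}
            ],
        },
    }


if __name__ == "__main__":
    pipeline = ssis_pipeline("RunNightlyEtl", "Finance/EtlProject/LoadSales.dtsx", "MyAzureSsisIR")
    trigger = schedule_trigger("NightlyAt2", "RunNightlyEtl", "Day", 1,
                               datetime(2024, 1, 1, 2, 0, tzinfo=timezone.utc))
    print(json.dumps(pipeline, indent=2))
    print(json.dumps(trigger, indent=2))
```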

Conclusion

ADF fully supports Git-based version control: you can keep your factory's pipelines, datasets, and other resources in a Git repository. Combined with ARM templates and Azure DevOps, you can deploy your factory through an Azure DevOps CI/CD pipeline. Learn more in this article by Softensity Data Engineer, Fernando Hubner.
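Git integration is stored on the factory itself as a `repoConfiguration` block. The sketch below adds an Azure Repos (Azure DevOps) configuration to a factory ARM resource; the organization, project, repository, and factory names are hypothetical. When you publish from the collaboration branch, ADF by default writes the generated ARM templates to an `adf_publish` branch, and that is what a CI/CD pipeline typically deploys.

```python
import copy
import json


def with_azure_repos_git(factory_resource: dict, organization: str, project: str,
                         repository: str, collaboration_branch: str = "main",
                         root_folder: str = "/") -> dict:
    """Return a copy of a factory ARM resource with Azure Repos Git integration."""
    resource = copy.deepcopy(factory_resource)  # leave the caller's dict untouched
    resource.setdefault("properties", {})["repoConfiguration"] = {
        # Azure Repos; a GitHub repository would use FactoryGitHubConfiguration.
        "type": "FactoryVSTSConfiguration",
        "accountName": organization,  # Azure DevOps organization
        "projectName": project,
        "repositoryName": repository,
        "collaborationBranch": collaboration_branch,
        "rootFolder": root_folder,  # repo folder that holds the ADF JSON files
    }
    return resource


if __name__ == "__main__":
    factory = {
        "type": "Microsoft.DataFactory/factories",
        "apiVersion": "2018-06-01",
        "name": "contoso-adf-dev",  # hypothetical factory
        "location": "eastus",
    }
    print(json.dumps(with_azure_repos_git(factory, "contoso", "DataPlatform", "adf-pipelines"), indent=2))
```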

About

Hey good people! My name is Rabby Hasan. I am from Bangladesh, and I have 6.5 years of experience in .NET development. I also have experience working with modern frontend frameworks such as Vue and Angular, as well as with Azure. I am a Microsoft Certified Solutions Developer.
